System development is becoming increasingly complex as developers strive to balance requirements for higher levels of autonomy and performance with the need to reduce time-to-market and cost. Picking the right infrastructure software can help accomplish both goals.

How do you choose the best software for your project? There are a number of evaluation criteria, but developers run into a ‘buyer beware’ risk when they choose software based solely on performance data. Picking a middleware solution that way is like choosing a car for the high horsepower number on its spec sheet. A single criterion like this is limited and can lead to mistakes.

The horsepower value is definitely important, but it has to be taken into context: Can you really compare it to what other car manufacturers claim? Under what conditions was the horsepower value measured? Is horsepower the main consideration if the car needs to be driven over treacherous terrain? The buyer needs to consider all this, as well as safety, suspension, mileage, comfort, manufacturer reputation and even cargo space in order to make the right decision.

Back to choosing a middleware: Once we focus on performance as a top decision criterion, it’s important to note that "performance" is not a single metric but a collection of parameters including latency, throughput, number of applications, CPU speed, number of cores, batching, reliability and security. Not all of these will carry equal weight for your specific use case.

A single-metric performance graph will always provide incomplete information. And if you are getting the numbers from a third party, you are likely to receive only the information they want you to see. It is important to understand the test conditions to ensure they apply to your system before you factor the data into your decision-making process. Many tests are performed in a controlled lab environment, with powerful dedicated machines and high bandwidth over dedicated networks that won’t represent the real-world conditions of your system. The relevant information comes from the testing procedures that accurately measure what is most critical for your project, using tests that mirror your real-world environment.
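To make the point about single-metric numbers concrete, here is a minimal Python sketch that reports several statistics for one set of latency samples. It is not taken from any RTI tool: the function name `summarize_latency` and the sample values are invented for illustration, and the percentiles use the simple nearest-rank method.

```python
def summarize_latency(samples_us):
    """Return several latency statistics (in microseconds) for one test run."""
    if not samples_us:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_us)
    n = len(ordered)

    def percentile(p):
        # Nearest-rank percentile: the smallest sample such that at least
        # p percent of all samples are less than or equal to it.
        rank = max(1, (p * n + 99) // 100)  # integer ceil(p * n / 100)
        return ordered[rank - 1]

    return {
        "min": ordered[0],
        "mean": sum(ordered) / n,
        "p50": percentile(50),
        "p99": percentile(99),
        "max": ordered[-1],
    }


if __name__ == "__main__":
    # Invented data: 98 fast samples, one slower sample, one large outlier.
    samples = [100] * 98 + [120, 5000]
    for name, value in summarize_latency(samples).items():
        print(f"{name}: {value}")
```

With these invented samples the median is 100 µs while the maximum is 5000 µs, so a graph that shows only the median would hide exactly the outlier a critical system may care about most.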

This blog post can help you better understand how performance tests work, so that you can apply this information to future decisions using performance data.

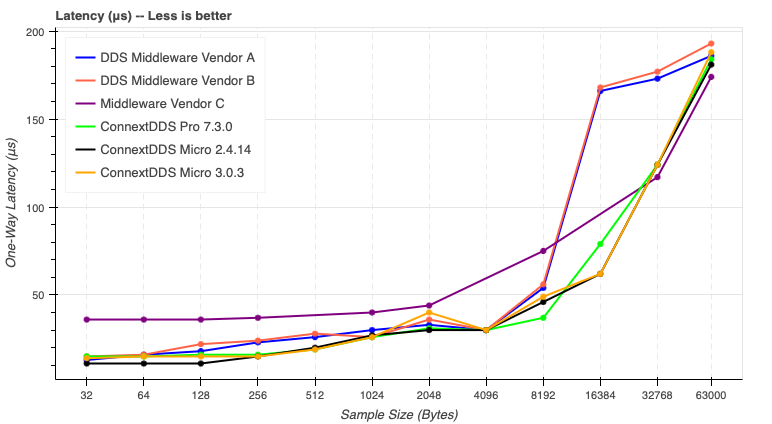

First, let’s look at some basic tests. Your project likely has some minimum latency and throughput requirements that you need to meet, and in most systems you may need to trade off and balance between the two. Figure 1 shows a minimum latency test for a range of different data sizes comparing a few different Data Distribution Service (DDS™) implementations, including open source and RTI Connext®. This illustrates Best Effort data delivery:

Figure 1: Latency vs. sample size

In Figure 1, all DDS implementations perform similarly at sample sizes up to 1 KB. Once the sample size increases to between 8 KB and 32 KB, there is a substantial latency difference between the open source implementations and Connext. For a sufficiently mature technology with healthy competition, all the competing products will perform nearly identically in a simple test. This is true of DDS today. For 80% of applications where DDS is the right solution, any DDS you choose will have good enough performance to meet basic system needs.

Yet that isn’t the whole story. Let’s look at the time for a system to have complete discovery for various middleware implementations (not just DDS-based ones) commonly used by the ROS 2 communication layer.

In this test we create multiple applications, each containing a single DDS Participant with a single Endpoint. For the most common use cases, under 25 or so endpoints, all DDS software performs similarly in terms of discovery time. This is probably because the network is not being stressed and the applications are barely using the CPU of the machines where they run. Based on the data up to this point, it would be difficult to choose a DDS implementation, as they might all meet your threshold criteria. It is only when we increase the stress on the system that we start seeing different trends:

Figure 2: Time to Complete Discovery vs. Number of Endpoints (Less is better).

Zooming out to 350 endpoints, we see that the discovery time for one of the middleware options (not a DDS implementation) does not scale well and increases exponentially.

Figure 3: Time to Complete Discovery vs. Number of Endpoints

And if we keep zooming out to 800 endpoints, only RTI Connext Pro keeps discovery within a reasonable time, while discovery time for all the other options does not scale well and increases exponentially (see Figure 4).

Figure 4: Time to Complete Discovery vs. Number of Endpoints, zooming out to 800 endpoints.

To be clear, it would likely be a poor design choice to have a system with 800 endpoints belonging to 800 participants within one single topic, and this data probably isn’t directly applicable to a typical system. However, RTI prides itself on designing our performance tests to go beyond what any of our customers need and stress the system to the failure point. By testing the extremes of performance, RTI can be confident that the system will perform under exceptional use cases.
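As a rough intuition for why discovery gets harder as the system grows, consider a deliberately simplified model in which every participant must learn about every other participant. This is an assumption for illustration only, not a description of how any of the tested products implement discovery, and the function name `discovery_announcements` is hypothetical. Even in this simple model the work grows much faster than the participant count:

```python
def discovery_announcements(participants: int) -> int:
    """Directed participant-to-participant announcements in a full mesh.

    Simplified model: each participant announces itself to every other one.
    """
    if participants < 0:
        raise ValueError("participants must be non-negative")
    return participants * (participants - 1)


if __name__ == "__main__":
    # Endpoint counts taken from the figures above (one participant each).
    for n in (25, 350, 800):
        print(f"{n} participants -> {discovery_announcements(n)} announcements")
```

Going from 25 to 800 participants multiplies the participant count by 32, but in this model the number of announcements grows from 600 to 639,200, more than a thousandfold. Real implementations differ in how they handle that load, which is the kind of difference a stress test exposes.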

So, do we even need performance tests if any option will do? Yes. Just because the system performs correctly for the most common use cases does not mean it will work for your specific use case. Receiving only 80% of your data on time is unacceptable for a critical system that needs to work 100% of the time. An additional benefit of conducting performance tests is that it keeps technology providers competitive, resulting in improved software and hardware, which helps raise the bar for system implementation in general.

How Does Performance Testing Add Value?

Straight performance should really only be a small part of the selection criteria. The more important question is how to use performance testing and performance data to guide the type of architecture needed, the options for optimizing your system, and the overall suitability of the technology for your particular use case.

At RTI, we take performance very seriously. Our customers count on our software to run their mission- and safety-critical applications around the world, in some 2,000+ designs across multiple industries. To maintain this bet-your-business trust, RTI runs a performance test lab staffed by a dedicated test team.

Every new release of our product is tested for regressions. The numbers are made public, and we disclose the hardware, the configurations and the software used, in order to facilitate independent test replication and verification. While we test for ideal scenarios where the machines are extremely powerful and the network is unlimited, we also test in more constrained scenarios using smaller devices (such as Raspberry Pi boards connected to consumer-grade routers, or AWS instances connected to our lab via a regular ISP). Internally, changes in the critical paths have to go through a set of performance tests that cover network performance, discovery, memory, and even increases in library size.

If performance is important for your system, we encourage you to dig into the numbers and the conditions under which the system is tested. Conduct your own testing for the most critical parts of your system. We can help you with that. One of the key tools we use for performance testing is RTI Perftest, a free benchmarking tool that is available for download on the RTI website.
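If you want a feel for what your own latency test involves before reaching for a full benchmarking tool, the following minimal Python sketch shows the basic shape: warm up, time many round trips, and keep every sample. It is not RTI Perftest and does not use any DDS API. The names `measure_round_trips` and `fake_round_trip` are hypothetical, and `fake_round_trip` is a stand-in that you would replace with a call that sends a sample over your real transport and waits for the reply.

```python
import time


def measure_round_trips(send_and_wait, count=1000, warmup=100):
    """Call send_and_wait() repeatedly and return per-call latencies in microseconds."""
    if count <= 0:
        raise ValueError("count must be positive")
    # Warm-up iterations are executed but not recorded, so one-time costs
    # (connection setup, caches) do not skew the results.
    for _ in range(warmup):
        send_and_wait()
    latencies_us = []
    for _ in range(count):
        start_ns = time.perf_counter_ns()
        send_and_wait()
        elapsed_ns = time.perf_counter_ns() - start_ns
        latencies_us.append(elapsed_ns / 1000)
    return latencies_us


def fake_round_trip():
    # Stand-in workload (assumption): replace with a real send-and-wait call.
    sum(range(1000))


if __name__ == "__main__":
    samples = measure_round_trips(fake_round_trip, count=500, warmup=50)
    print(f"collected {len(samples)} samples")
    print(f"min {min(samples):.1f} us, max {max(samples):.1f} us")
```

Keeping the raw samples rather than a running average lets you look at the tail of the distribution later, and running the same harness on hardware and networks that resemble your deployment is what makes the numbers relevant to your system.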

For additional details on performance numbers and how they relate to your system requirements, please contact your RTI support team or reference this page to learn more about performance testing work.

About the authors:

Bob Leigh, Senior Director of Commercial Markets, RTI

Bob has been developing new markets and building technology companies for over 20 years as an entrepreneur and technology leader. After graduating from Queen’s University with a degree in Mathematics & Engineering, he spent his early career in small companies and is the founder of two. At each venture, he led the charge to create new technologies for emerging markets and disruptive applications. Since joining RTI, Bob has led the development of new commercial markets and now leads the Commercial Markets team, driving growth across multiple markets and looking for the next big disruption where RTI can apply its expertise to help solve society’s largest challenges.

Javier Morales Castro, Staff Engineer, RTI

Javier Morales is the Performance Team lead at RTI, where he has been working for over 10 years. He is the main developer for RTI's performance tools (including RTI Perftest) and benchmarks, and he focuses on middleware optimization and performance-related criteria for the company’s global customer base. Javier studied Telecommunications Engineering at the University of Granada, Spain, and holds a master's degree in Multimedia Data Processing Technologies.
