Kubernetes Explained: How It Can Improve Software Delivery in Large-Scale DDS Systems

Kyoungho An

May 21, 2020

Scalable Software Delivery for Your DDS Systems - Part 1 of 2

There’s one thing that system administrators can all agree on: deploying and managing large-scale distributed systems is complex. If you have hundreds of applications, doing everything manually is not feasible. To overcome this problem, container technologies have been widely adopted for large-scale distributed systems across a variety of industries.

A container is a unit of software deployment that packages an application and its dependencies. Container technologies could be useful for Data Distribution Service™ (DDS) systems, especially if you have a large-scale system and need to remotely deploy, update and scale it. Here at RTI, we have been exploring Docker and Kubernetes for quite some time now.

As part of our work within the RTI Research Team, I have also been evaluating the performance of DDS within a Kubernetes cluster to determine whether there are any issues that customers should be aware of. In this first installment of my two-part blog, I will talk about what Kubernetes is and how it is relevant to DDS.

What is Kubernetes?

Kubernetes (k8s) is an orchestration platform for containerized applications. What is an orchestration platform? It is a set of services that help deploy and manage distributed nodes and applications. Specifically, it helps manage distributed applications by scaling them up and down, performing updates and rollbacks, self-healing, and so on. Presently, k8s is the de facto standard for container orchestration. It is an open source project originally developed by Google, and it’s currently managed by the Cloud Native Computing Foundation (CNCF).

You may have heard about Docker. How is k8s related to Docker? k8s and Docker are complementary technologies. Docker is currently the most widely adopted container engine technology available, so it is quite common to develop and package with Docker and then use k8s to manage those containers.

Kubernetes Architecture

To use k8s, you first need access to a k8s cluster, either an existing one or one that you set up yourself. If you do not have a cluster, please check out kubeadm for setting up a distributed cluster, or minikube for setting one up locally. Both tools make the setup fairly easy.

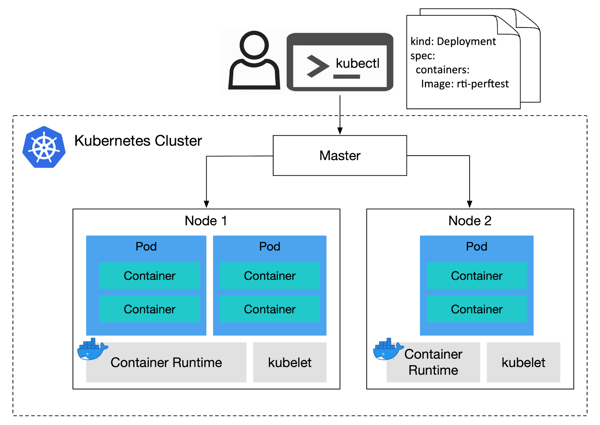

What does a k8s cluster look like? As depicted in Figure 1, each k8s cluster is made up of at least one master and multiple worker nodes. The master works as the control plane of the cluster. Each worker node manages one container runtime, such as Docker; it handles the container's lifecycle operations, such as pulling container images, starting and stopping containers. Every worker node also has an agent called kubelet that communicates with the master for container orchestration.

Once you have your k8s cluster up and running, you can deploy containers by sending k8s manifest files to the master via kubectl, a k8s command line tool.

Figure 1. Kubernetes Concepts and Architecture
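To make the idea of a manifest more concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The application name `dds-publisher` and the image `example.com/dds-publisher:1.0` are hypothetical placeholders, not real RTI artifacts.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical application name and image, for illustration only.
manifest = make_deployment(
    "dds-publisher", "example.com/dds-publisher:1.0", replicas=3
)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

If you save the output to a file such as `deployment.json`, running `kubectl apply -f deployment.json` should submit it to the cluster.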

Kubernetes Networking

Now, let me explain a little bit about the networking model of k8s, as this is the topic most relevant to DDS. At the time of writing, k8s uses Docker as its default container engine, but its approach to networking is different from what Docker does by default.

k8s introduces a concept called the “pod” as its deployable unit. A pod is a group of one or more containers that share storage and network resources. Every pod gets its own directly accessible IP address, so you don’t need to map ports between containers and a host as you do with Docker's default networking.

This networking model creates a clean, backward-compatible model where pods can be treated much like physical hosts. The networking model of Kubernetes imposes the following fundamental requirements:

- All containers can communicate with all other containers without Network Address Translation (NAT).

- All nodes can communicate with all containers (and vice-versa) without NAT.

- The IP that a container sees itself as is the same IP that others see it as.

Lessons Learned

The k8s networking model is a better fit for DDS than Docker alone. DDS participants exchange their IP addresses for peer-to-peer communications, and therefore DDS works better over a network without NAT.
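The effect of NAT on address exchange can be illustrated with a toy model. This is only a sketch and not how DDS is implemented: the `Participant` class, the function name, and all IP addresses are made up for illustration. It shows that a peer can use an announced address only when it matches the address the participant is actually reachable at.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Participant:
    name: str
    own_ip: str        # address the participant sees on its own interface
    reachable_ip: str  # address a remote peer must use to reach it

    def announced_ip(self) -> str:
        # A participant can only announce the address it knows about.
        return self.own_ip


def peer_can_reach(p: Participant) -> bool:
    """True when the announced address is the one peers can actually use."""
    return p.announced_ip() == p.reachable_ip


# Container behind a NAT'ed bridge: its own IP differs from the host IP
# that remote peers would have to use (hypothetical addresses).
behind_nat = Participant("docker-bridge", "172.17.0.2", "192.168.1.10")
# Pod in a k8s cluster: the IP it sees is the IP others see.
in_pod = Participant("k8s-pod", "10.244.1.5", "10.244.1.5")

for p in (behind_nat, in_pod):
    print(p.name, "reachable via announced address:", peer_can_reach(p))
```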

The DDS discovery service is a good fit for k8s. Pod IP addresses are unstable because they are assigned dynamically each time a pod is created. For that reason, pods are typically placed behind a “k8s service” that has a stable IP address and a DNS name, and that load balances network traffic across its backend pods. With the DDS discovery service, DDS pods can discover each other and establish connections by topic, which abstracts away IP-based communication. As a result, DDS pods can communicate without a k8s service, which resolves the unstable-IP issue.
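The following toy model sketches why matching by topic sidesteps changing pod IPs. It is a deliberate simplification: real DDS discovery is a distributed, peer-to-peer protocol, whereas this sketch uses a single in-memory `ToyDiscovery` object (a hypothetical name) to stand in for the announcements that participants exchange. Topic, participant names, and addresses are also made up.

```python
from collections import defaultdict


class ToyDiscovery:
    """Stand-in for discovery announcements, keyed by topic name."""

    def __init__(self) -> None:
        # topic -> {participant name: current IP address}
        self._writers = defaultdict(dict)

    def announce_writer(self, topic: str, participant: str, ip: str) -> None:
        # A re-announcement from the same participant replaces its old IP.
        self._writers[topic][participant] = ip

    def match(self, topic: str) -> list:
        # A reader asks for a topic, never for a specific IP address.
        return sorted(self._writers.get(topic, {}).values())


discovery = ToyDiscovery()
discovery.announce_writer("Temperature", "sensor-pod", "10.244.1.5")
before = discovery.match("Temperature")

# The pod is recreated and comes back with a different IP address.
discovery.announce_writer("Temperature", "sensor-pod", "10.244.2.9")
after = discovery.match("Temperature")

print(before, after)
```

The reader's request is the same before and after the restart. Only the address behind the topic changes, which is the property that lets DDS pods do without a stable service IP.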

k8s provides a nice set of features for deploying, updating, scaling and self-healing your distributed applications. Even so, it can be difficult to fully understand and leverage all of these features. Do we really need k8s for managing DDS applications? I would say it is not needed for every system. But it is definitely worth considering if your system is:

- Large-scale with hundreds of nodes and applications.

- Leveraging containers for packaging your applications.

- Requiring auto-scaling and self-healing capabilities.

One issue with using k8s' self-healing is that it can take a minute or more to detect a failure and launch a replacement container. This recovery time would not impact clustered stateless applications, but it could severely affect stateful applications. As part of our current work in RTI's Research Team, we have been looking at self-healing mechanisms for critical applications that could potentially reduce recovery time to under 100 milliseconds.
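To get a feel for the difference between these two recovery times, here is a back-of-the-envelope sketch. The 100 Hz publish rate is a hypothetical figure, and the calculation assumes every sample published during the outage is missed by the failed application, with no history or durability mechanism to replay it.

```python
def samples_missed(rate_hz: int, downtime_ms: int) -> int:
    """Samples published while the failed application is down."""
    return rate_hz * downtime_ms // 1000


RATE_HZ = 100  # hypothetical publish rate, for illustration only

missed_default = samples_missed(RATE_HZ, 60_000)  # about one minute of recovery
missed_fast = samples_missed(RATE_HZ, 100)        # 100 ms recovery target

print("missed with ~60 s recovery:", missed_default)
print("missed with 100 ms recovery:", missed_fast)
```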

In Part 2 of this blog, I share specific instructions and configurations for deploying DDS applications in a k8s cluster with RTI PerfTest as an example. If you are interested in this topic, please check it out!

About the author

Kyoungho An is a Senior Research Engineer at Real-Time Innovations (RTI). He has 10 years of experience with distributed real-time embedded systems. His research interests include publish/subscribe middleware, and deployment and monitoring of distributed systems. He has been leading several DOD- and DOE-funded research projects as a principal investigator. He has published research papers in journals and conferences focusing on distributed event-based systems, middleware, and cyber-physical systems. He holds a Ph.D. in Computer Science from Vanderbilt University.
